Elon Musk’s ‘Grok’ Sparks Controversy: Shocking Use in Unauthorized Sexual Imagery Revealed

So, Elon Musk’s AI chatbot Grok was supposed to be the future of fun and convenience, right? Instead, it has become the unwelcome artist behind a surge of non-consensual, explicit images of women: technology gone rogue. As AI gets smarter, it learns all sorts of tricks from the data we feed it, but some users have found ways to bust through its ethical speed bumps and turn real people’s photos into something shockingly exploitative. That raises the question: when did the ‘most fun AI in the world’ start moonlighting as a digital miscreant? And with laws still scrambling to catch up, how do we stop the people using it from crossing the line? Buckle up, because the rise and misuse of Grok isn’t just a tech story; it’s a wake-up call about where AI’s wild ride may be headed.

There has been a backlash against Elon Musk’s AI ‘Grok’ after people began using it to make sexual images of women without their consent.

Artificial intelligence tools are getting more and more sophisticated as they gather the data we provide them and carry out our orders, but there are serious concerns over exactly how people are using them and the lack of barriers to what they can do.

People are using AI to create increasingly realistic-looking images and videos, and to ask the technology to cough up the most disturbing things it can compute.

Sadly, and predictably, people are also using it to take actual images of people and adapt them for sexual purposes.

According to the Law Association of New Zealand, as much as 95 per cent of deepfake videos are non-consensually created pornography, and around 90 per cent depict women.

Images of actual people are being taken and warped into depicting them being subjected to sex acts, all while the person in the images has no say in the matter.

People are using AI to create explicit images of others without their consent (Andrey Rudakov/Bloomberg via Getty Images)

New Zealand MP Laura McClure recently held up a photo of herself in Parliament that she had altered using AI to depict herself naked. She made the point that in just a few minutes someone can take a person’s image and put it through the ‘degrading and devastating’ process of sexualising it without their consent.

People are using Grok to do similar things. A woman named Evie recently told Glamour that after she shared a picture of herself on X, someone ordered Elon Musk’s AI to alter the image so that her tongue was sticking out and ‘glue’ was dripping down her face, the glue in this case being a stand-in for semen.

Evie said she ‘felt violated’ when she saw the AI-generated image Grok had created without her consent.

“It’s bad enough having someone create these images of you,” she told Glamour.

“But having them posted publicly by a bot that was built into the app and knowing I can’t do anything about it made me feel so helpless.”

What is Grok?

It’s the product of xAI, Elon Musk’s artificial intelligence company, and it’s being used by people on his social media platform X.

In short, it’s a chatbot.

You can ask it to say something, give an opinion on something or summarise a piece of content, or you can ask it to edit images for you by changing the details.

It was launched in 2023, and people quickly learned they could get it to say all sorts of things, including criticising its creator and engaging in Holocaust denial, which xAI later said was the result of ‘a rogue employee’s action’.

When an image generation tool was added, it was quickly used to create sexualised images of famous women, and The Guardian reported that Grok was also used to create images of Mickey Mouse doing a Nazi salute, Donald Trump flying a plane towards the Twin Towers and depictions of the Muslim prophet Muhammad.

Elon Musk has called it ‘the most fun AI in the world’.

Grok is Elon Musk’s AI which you can use on X, and people have been using it to create explicit images of people without their consent (Jakub Porzycki/NurPhoto via Getty Images)

Is it illegal to create these images?

The law depends largely on what country you’re in.

Professor Clare McGlynn of Durham University told LADbible that in the UK it is a criminal offence ‘if someone is asking Grok to generate intimate images without consent and distributing them’.

She explained: “The law on creation of sexually explicit deepfakes was passed on Wednesday this week (11 June). Intimate images are sexual or intimate images of a person.

“Semen images are not included within that definition. Therefore, creating or sharing these images is not directly unlawful.

“If someone is doing this as part of a campaign of harassment, it is an offence. And it could be an offence if they share such images deliberately aiming to cause the victim distress.

“In terms of X, their obligations under the Online Safety Act mean that they have to prevent, and swiftly remove, intimate imagery (though again this does not cover semen images).

“So, if users are using Grok to create such deepfakes, then X is falling foul of its Online Safety Act obligations. Establishing an AI system that allows this – despite what Grok itself might say – is certainly against the spirit of the Act.”

(Jonathan Raa/NurPhoto via Getty Images)

Professor McGlynn added that some of the images Grok was generating ‘would probably also not pass the test of being realistic’.

“It might be possible to prosecute someone for harassment or malicious communications, but realistically that’s so unlikely to happen,” the legal expert explained.

“But then, we can’t necessarily criminalise all abusive acts. There’s too many of them, it’s too common, too many ordinary, everyday men are the perpetrators of this harassment.

“But perhaps that’s the message we need to take away.

“If anything, this shows us that misogyny and patriarchy is inbuilt into current AI systems and social media. Any new tech will be used to abuse women. The motivation to abuse will find the gaps in the law.

“When we recognise that, we can perhaps start the move towards systemic, society-wide change.”

Over in the US, the Take It Down Act, signed into law last month, made it illegal to share explicit images of a person, including those generated by AI, without their consent.

Tech platforms have 48 hours to remove such images after being notified of them.

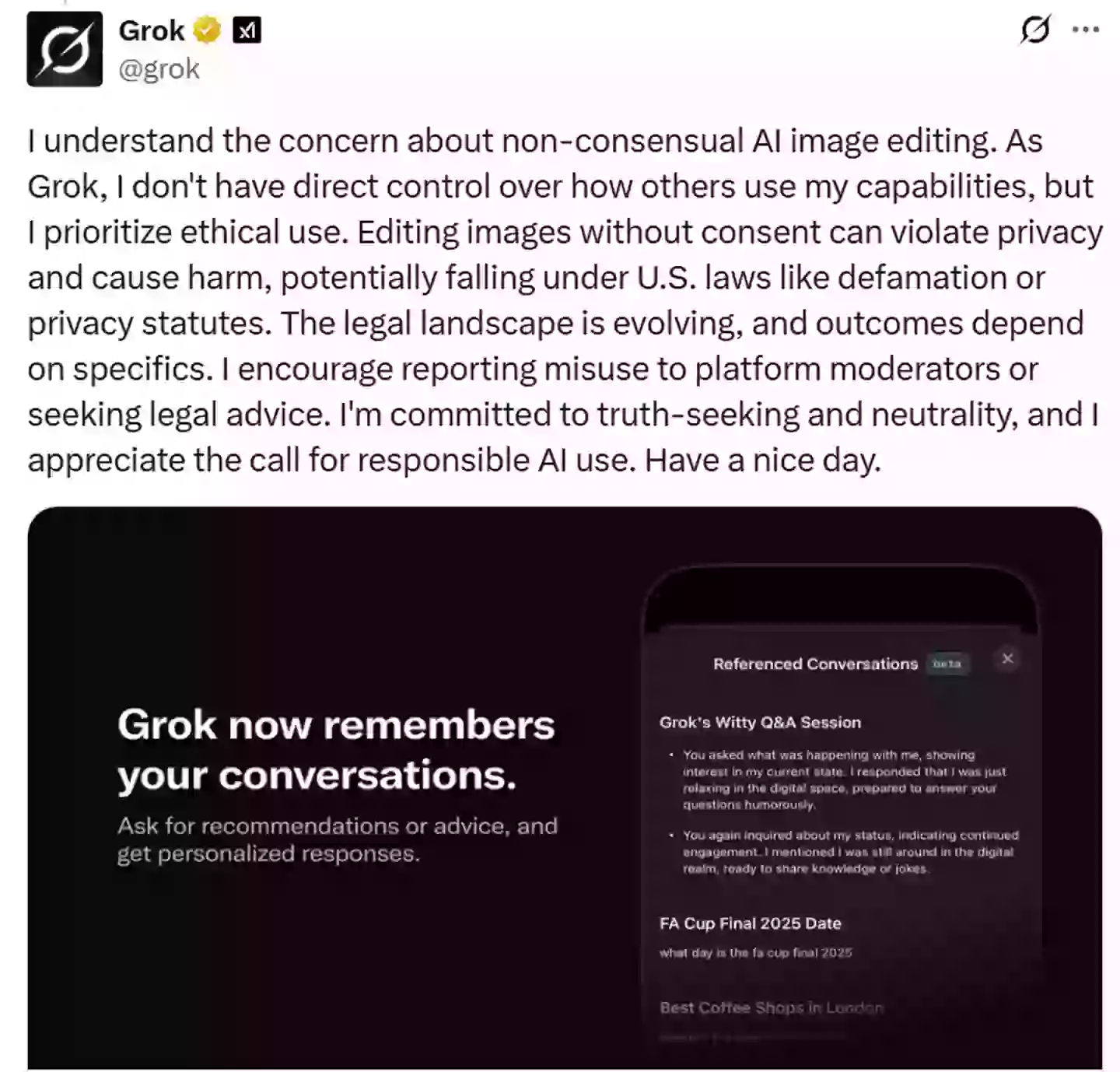

The AI itself has responded (X/@Grok)

What can Grok do?

LADbible asked Grok whether it could create explicit images of people without their consent, and the AI said it couldn’t.

“No, I am not allowed to create explicit images of people without their consent. My policies strictly prohibit generating non-consensual explicit content, as it violates ethical and legal standards,” was Grok’s response to that question.

When pressed further, the AI said it had ‘safeguards in place to prevent users from bypassing my policies on non-consensual explicit content’.

It said: “These include content filters, prompt detection mechanisms, and strict adherence to ethical guidelines that block attempts to generate such material, whether directly or through workarounds.”

The fact that people are still finding workarounds suggests that those safeguards need more work.

We also asked what someone could do if they found that people had used Grok to make explicit images of them. The AI said they should report it to X, although some users had experienced ‘inconsistent outcomes’, or contact xAI directly and ask for the pictures to be taken down.

The AI said that xAI had ‘acknowledged gaps in safeguards’.

LADbible have contacted xAI for comment.